# Prometheus statsd exporter install

Let's start this section by installing statsd_exporter. If you have the Golang environment properly set up on your machine, you can install statsd_exporter by issuing: `$ go get /prometheus/statsd_exporter`. Note that with Go 1.18 and later, `go get` no longer builds or installs commands; `go install` with a version suffix such as `@latest` does that instead.

Alternatively, you can deploy statsd_exporter using the prometheus/statsd-exporter container image. The image documentation includes instructions on how to pull and run the image.
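Once statsd_exporter is running, a quick way to confirm that it accepts data is to send it a metric yourself. The sketch below uses only the Python standard library. It encodes one metric in the plain statsd line format (`name:value|type`) and sends it over UDP.

Two assumptions are worth flagging. First, the metric name `airflow.smoke_test` and the helper names are made up for illustration. Second, port 8125 only works if the exporter listens where Airflow's `statsd_port` points. statsd_exporter listens on UDP port 9125 by default, so it would need to be started with `--statsd.listen-udp=:8125`, or Airflow's port changed to 9125.

```python
import socket


def format_statsd(name: str, value: float, metric_type: str = "c") -> bytes:
    """Encode one metric in the plain statsd line format: <name>:<value>|<type>.

    metric_type is "c" (counter), "g" (gauge) or "ms" (timer).
    """
    if metric_type not in ("c", "g", "ms"):
        raise ValueError(f"unsupported statsd type: {metric_type}")
    # Print whole numbers without a trailing ".0", e.g. 1.0 -> "1".
    text_value = str(int(value)) if float(value).is_integer() else str(value)
    return f"{name}:{text_value}|{metric_type}".encode("ascii")


def send_statsd(name: str, value: float, metric_type: str = "c",
                host: str = "localhost",
                port: int = 8125) -> None:  # assumes exporter runs with --statsd.listen-udp=:8125
    """Fire-and-forget one statsd datagram over UDP, as a statsd client would."""
    payload = format_statsd(name, value, metric_type)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(payload, (host, port))
```

For example, `send_statsd("airflow.smoke_test", 1)` sends a single counter increment. If the exporter received it, a metric named along the lines of `airflow_smoke_test` should appear on its `/metrics` page, which is served on port 9102 by default.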

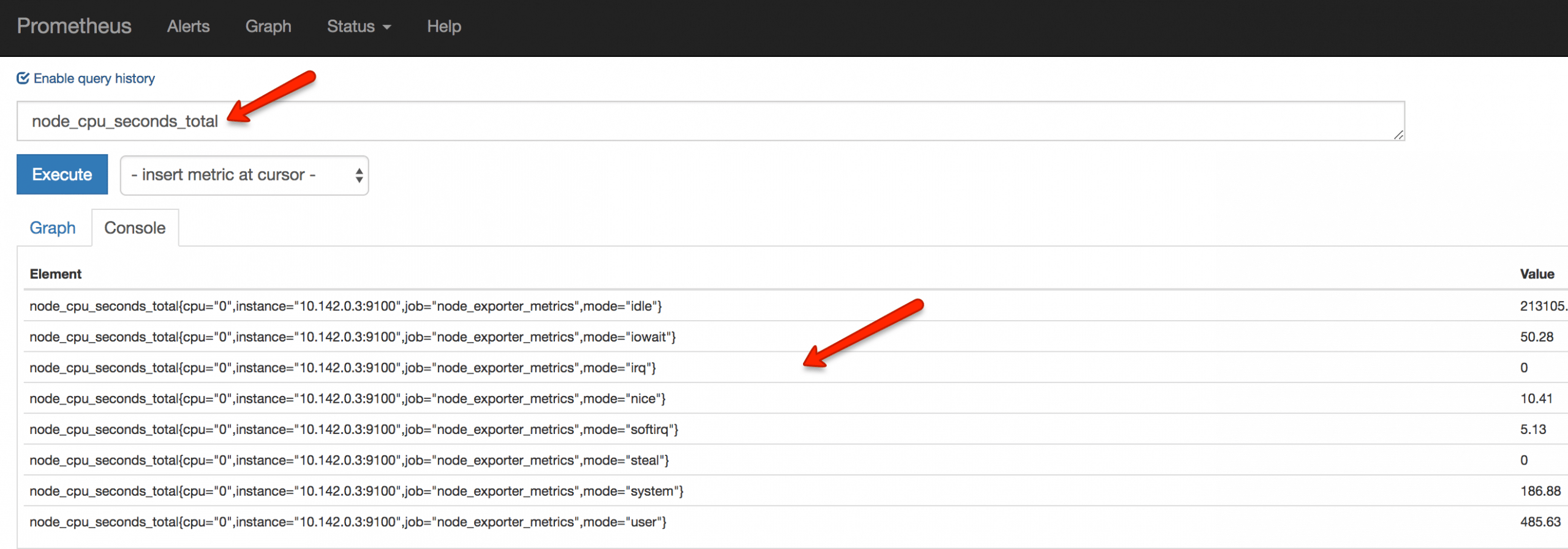

The last step in this section is to start the Airflow webserver and scheduler processes. You may want to run these commands in two separate terminal windows. Make sure that you activate the Python virtual environment before issuing the commands:

- `$ airflow webserver`
- `$ airflow scheduler`

At this point, Airflow is running and sending statsd metrics to localhost:8125. In the next section, *Converting statsd metrics to Prometheus metrics*, we will spin up statsd_exporter, which will collect the statsd metrics and export them as Prometheus metrics.
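Before statsd_exporter takes over port 8125, you can confirm that Airflow is actually emitting metrics by listening on that UDP port yourself. The sketch below is a throwaway debugging aid, not part of the setup. It assumes the plain statsd line format (`name:value|type`), and its function names are hypothetical. The exact metric names you see depend on the Airflow version.

```python
import socket
from typing import List, Tuple


def parse_statsd_line(line: str) -> Tuple[str, float, str]:
    """Split 'name:value|type[|@rate...]' into (name, value, type).

    Sample rates and tags after the type are ignored in this sketch.
    """
    name, rest = line.split(":", 1)
    fields = rest.split("|")
    return name, float(fields[0]), fields[1]


def read_statsd_lines(sock: socket.socket, max_packets: int = 10,
                      timeout: float = 5.0) -> List[Tuple[str, float, str]]:
    """Collect parsed metrics from up to max_packets UDP datagrams.

    One datagram may carry several newline-separated metrics.
    Stops early if no datagram arrives within `timeout` seconds.
    """
    sock.settimeout(timeout)
    metrics = []
    for _ in range(max_packets):
        try:
            data, _addr = sock.recvfrom(65535)
        except socket.timeout:
            break
        for line in data.decode("utf-8").splitlines():
            if line.strip():
                metrics.append(parse_statsd_line(line.strip()))
    return metrics


def listen(host: str = "localhost", port: int = 8125, max_packets: int = 10) -> None:
    """Bind to the port configured in airflow.cfg and print what Airflow sends."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.bind((host, port))
        for name, value, kind in read_statsd_lines(sock, max_packets):
            print(f"{kind:>2}  {name} = {value}")
```

Calling `listen()` returns after ten datagrams, or after five seconds pass without one. Stop it before starting statsd_exporter, since both would need the same UDP port.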

Let's start by installing Apache Airflow. In this tutorial, I am using Python 3 and Apache Airflow version 1.10.12.

1. Create a Python virtual environment where Airflow will be installed: `$ python -m venv airflow-venv`
2. Activate the virtual environment: `$.`
3. Install Apache Airflow along with the statsd client library: `$ pip install apache-airflow` and `$ pip install statsd`
4. Create the Airflow home directory in the default location: `$ mkdir ~/airflow`
5. Create the Airflow database and the airflow.cfg configuration file: `$ airflow initdb`
6. Open the Airflow configuration file airflow.cfg for editing: `$ vi ~/airflow/airflow.cfg`
7. Turn on the statsd metrics by setting `statsd_on = True`. Before saving your changes, the statsd configuration should look as follows:
   - `statsd_on = True`
   - `statsd_host = localhost`
   - `statsd_port = 8125`
   - `statsd_prefix = airflow`

Based on this configuration, Airflow is going to send the statsd metrics to a statsd server that accepts the metrics on localhost:8125. We are going to start that server up in the next section.
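To double-check the edited settings without starting Airflow, you can read the file with Python's standard `configparser`. This is a sketch with a few assumptions:

- It assumes the Airflow 1.10.x layout, where the statsd options live in the `[scheduler]` section. Airflow 2 moved them to `[metrics]`.
- The helper names are hypothetical.
- It falls back to the values shown above when an option is missing.

```python
import configparser
import os
from typing import NamedTuple


class StatsdSettings(NamedTuple):
    enabled: bool
    host: str
    port: int
    prefix: str


def read_statsd_settings(cfg_text: str, section: str = "scheduler") -> StatsdSettings:
    """Extract the statsd options from the text of an airflow.cfg file.

    Airflow 1.10.x keeps these options in [scheduler]; for Airflow 2,
    pass section="metrics" instead.
    """
    # interpolation=None: read values verbatim; airflow.cfg contains
    # %-style log format strings that we never need expanded.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(cfg_text)
    sec = parser[section]
    return StatsdSettings(
        enabled=sec.getboolean("statsd_on", fallback=False),
        host=sec.get("statsd_host", fallback="localhost"),
        port=sec.getint("statsd_port", fallback=8125),
        prefix=sec.get("statsd_prefix", fallback="airflow"),
    )


def load_from_airflow_home() -> StatsdSettings:
    """Read $AIRFLOW_HOME/airflow.cfg, defaulting to ~/airflow as in this tutorial."""
    home = os.environ.get("AIRFLOW_HOME", os.path.expanduser("~/airflow"))
    with open(os.path.join(home, "airflow.cfg"), encoding="utf-8") as fh:
        return read_statsd_settings(fh.read())
```

Once the edit is saved, `load_from_airflow_home().enabled` should be `True`, and `.port` should match the port the statsd server listens on.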

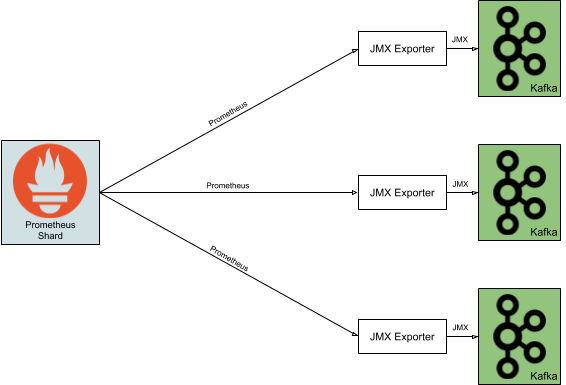

This blog post covers a proof of concept, which shows how to monitor Apache Airflow using Prometheus and Grafana.

Apache Airflow can send metrics using the statsd protocol. These metrics would normally be received by a statsd server and stored in a backend of choice. Our goal, though, is to send the metrics to Prometheus. How can the statsd metrics be sent to Prometheus? It turns out that the Prometheus project comes with a statsd_exporter that functions as a bridge between statsd and Prometheus. The statsd_exporter receives statsd metrics on one side and exposes them as Prometheus metrics on the other side. The Prometheus server can then scrape the metrics exposed by the statsd_exporter.

The overall Airflow monitoring architecture is shown in the diagram. It depicts three Airflow components: the Webserver, the Scheduler, and the Worker. The solid line starting at the Webserver, Scheduler, and Worker shows the metrics flowing from these three components to the statsd_exporter. The statsd_exporter aggregates the metrics, converts them to the Prometheus format, and exposes them as a Prometheus endpoint. The Prometheus server periodically scrapes this endpoint and persists the metrics in its database. The Airflow metrics stored in Prometheus can then be viewed in a Grafana dashboard.

The remaining sections of this blog will create the setup depicted in the diagram. We are going to:

- Configure Airflow to publish the statsd metrics.
- Convert the statsd metrics to Prometheus metrics using statsd_exporter.
- Deploy the Prometheus server to collect the metrics and make them available to Grafana.

By the end of the blog, you should be able to watch the Airflow metrics in the Grafana dashboard.
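To make the exporter's role in the diagram more concrete, here is a toy model of the middle box. It folds incoming statsd lines into counters and gauges, then renders them in Prometheus's text exposition format.

This is an illustration under simplifying assumptions, not statsd_exporter's implementation. The real exporter also handles timers, sample rates, tags and mapping rules, and its exact metric naming may differ. The helper names are hypothetical.

```python
import re
from collections import defaultdict
from typing import Dict, Iterable


def to_prometheus_name(statsd_name: str) -> str:
    """Approximate name handling: characters outside [a-zA-Z0-9_] become '_'."""
    name = re.sub(r"[^a-zA-Z0-9_]", "_", statsd_name)
    # Prometheus metric names must not start with a digit.
    return "_" + name if name[:1].isdigit() else name


def aggregate(lines: Iterable[str]) -> Dict[str, Dict[str, float]]:
    """Fold statsd lines into state: counters add up, gauges keep the last value."""
    state: Dict[str, Dict[str, float]] = {"counter": defaultdict(float), "gauge": {}}
    for line in lines:
        name, rest = line.split(":", 1)
        value_text, kind = rest.split("|")[:2]  # sample rates (|@0.1) are ignored
        value = float(value_text)
        if kind == "c":
            state["counter"][name] += value
        elif kind == "g":
            # statsd treats signed gauges ("+1|g") as deltas; this sketch does not.
            state["gauge"][name] = value
        # Timers ("ms") and other types are skipped in this sketch.
    return state


def render(state: Dict[str, Dict[str, float]]) -> str:
    """Render the aggregated state in the Prometheus text exposition format."""
    out = []
    for kind in ("counter", "gauge"):
        for name, value in sorted(state[kind].items()):
            prom = to_prometheus_name(name)
            out.append(f"# TYPE {prom} {kind}")
            out.append(f"{prom} {value:g}")
    return "\n".join(out) + "\n"
```

Feeding it two `airflow.scheduler_heartbeat:1|c` lines yields a counter `airflow_scheduler_heartbeat` with the value 2. This is roughly the kind of sample Prometheus collects each time it scrapes the exporter's endpoint.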
